Today marks the release of a new automated marketplace moderation script, a tool that fundamentally changes how decentralized marketplaces handle content moderation. This script combines local LLM technology with Particl's existing moderation framework to create an automated system that maintains our core values of privacy and decentralization while significantly reducing the burden on users.

Built using Python, this new solution operates entirely on your local device. It analyzes marketplace listings using locally run, lightweight LLM models, requiring no external API calls or interaction with third-party services. Your data stays on your machine, with the script establishing connections exclusively to Particl Marketplace itself. The application runs on virtually any computing environment, from powerful workstations to modest setups like Raspberry Pis or virtual machines, and supports Windows, macOS, and Linux.

You can access the script and its documentation through our GitHub repository: https://github.com/cryptoguard/particl-market-moderation-script

How We Got Here: The Current Moderation System

This release builds on Particl Marketplace's initial moderation system (v0.1), a decentralized approach that puts moderation control directly in users' hands. This system has served the marketplace well since launch, but as the platform grew, we identified opportunities to make it more efficient and more proactive while maintaining its decentralized nature.

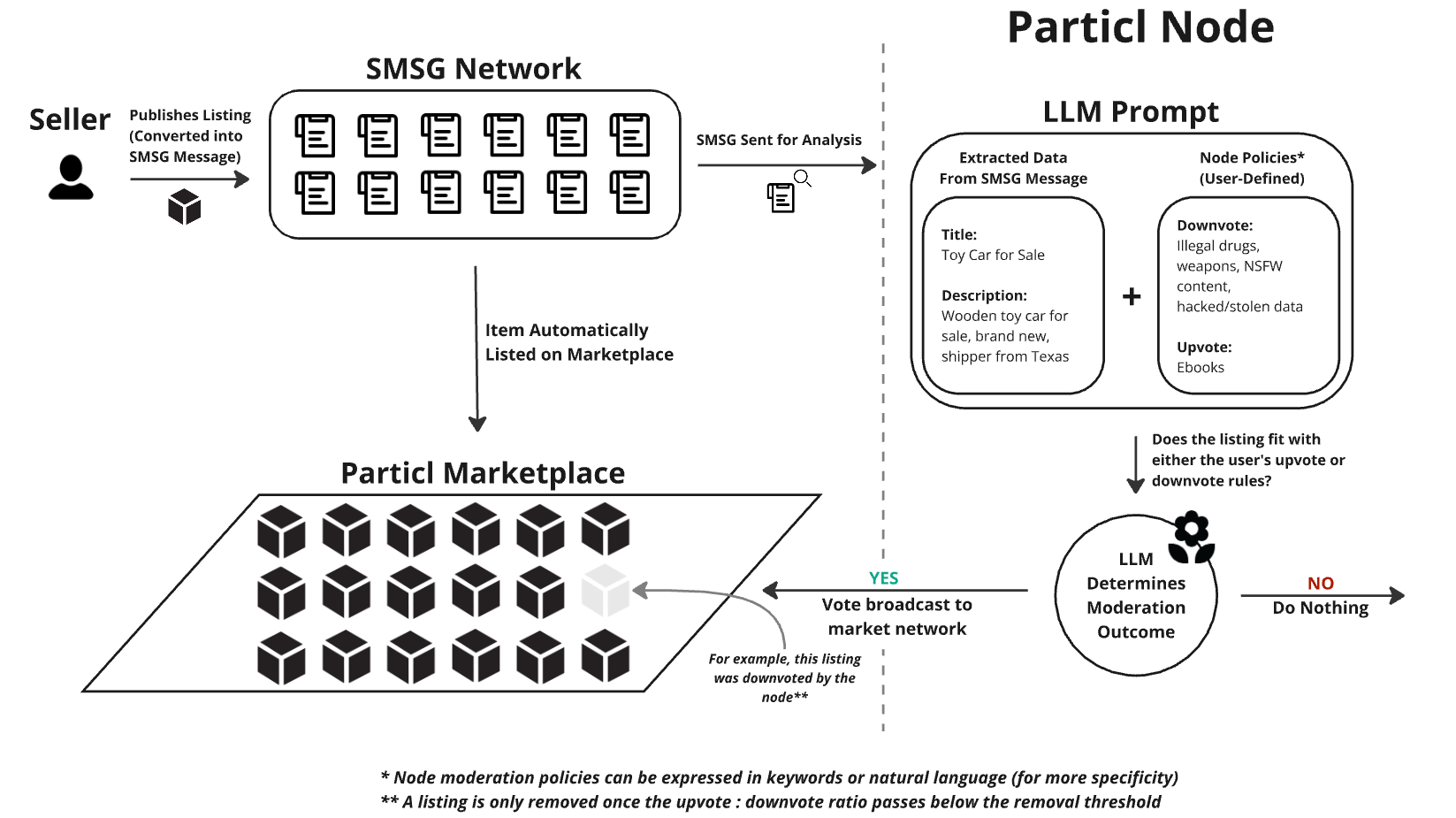

The current system implements a voting mechanism that is conceptually similar to Reddit's upvote/downvote system, but with crucial differences that make it suitable for a decentralized environment. When users encounter a potentially problematic listing, they can flag it for community review directly in the application. Flagged listings then appear on a public list, where the community can either support the original flag with additional downvotes or contest it with upvotes.

Content removal follows strict mathematical thresholds rather than arbitrary decisions. A listing is removed only when its net negative vote weight (downvotes minus upvotes) exceeds 0.1% of Particl's total coin supply. For example, assuming a total supply of 15,000,000 coins, a listing must accumulate a net negative vote weight of 15,000 coins to be removed.
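The threshold arithmetic can be sketched in a few lines of Python. The function name is illustrative, and the supply value is the example figure from the paragraph above, not a live reading from the network.

```python
from decimal import Decimal

# Removal threshold as a fraction of total coin supply (0.1%, as described above).
REMOVAL_FRACTION = Decimal("0.001")


def removal_threshold(total_supply: Decimal) -> Decimal:
    """Return the net negative vote weight, in coins, needed to remove a listing."""
    return total_supply * REMOVAL_FRACTION


# Example supply figure from the text; the real supply changes over time.
threshold = removal_threshold(Decimal("15000000"))
print(f"Removal threshold: {threshold:,.0f} coins")
```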

Importantly, votes aren't calculated on a one-user-one-vote basis. Since Particl's decentralized architecture makes it trivial to create multiple addresses, the system instead weighs votes based on the number of coins in the voting wallet. This prevents gaming the system, as coins can't be fabricated within an address. The more stake you hold, the more influence your vote carries.
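To illustrate why coin weighting resists address spam, here is a minimal sketch of a stake-weighted tally. The `Vote` class and function names are hypothetical, and treating the threshold as a strict "greater than" comparison is an assumption; the marketplace's actual implementation may differ.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Vote:
    wallet_balance: float  # coins held by the voting wallet
    is_downvote: bool      # True supports the flag, False contests it


def net_negative_weight(votes: Iterable[Vote]) -> float:
    """Sum the stake behind downvotes and subtract the stake behind upvotes."""
    return sum(v.wallet_balance if v.is_downvote else -v.wallet_balance for v in votes)


def is_removed(votes: Iterable[Vote], threshold: float) -> bool:
    """Assumption: the listing is removed once net weight strictly exceeds the threshold."""
    return net_negative_weight(votes) > threshold


# One wallet holding 16,000 coins carries the same weight as the same
# coins split across ten addresses, so creating extra addresses gains nothing.
single = [Vote(16000, True)]
split = [Vote(1600, True) for _ in range(10)]
print(net_negative_weight(single) == net_negative_weight(split))
```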

Addressing Current Limitations

While this system has proven effective at maintaining marketplace standards, its continued usage has highlighted two significant challenges:

First, the system requires constant attention from active users to maintain effective moderation. This creates an ongoing burden on the community and can lead to inconsistent moderation coverage during periods of lower user activity.

Second, and more critically, the reactive nature of the system means users must be exposed to potentially undesirable content before they can vote to remove it. While early adopters and technology enthusiasts might accept this as a necessary trade-off to enjoy unapologetic decentralization, it presents a significant barrier to broader adoption, particularly among more mainstream users.

These challenges demanded a solution that could maintain our commitment to decentralization and privacy while reducing the burden on users and improving the marketplace experience.

The Promise of Local LLM Technology

The breakthrough came with recent advances in Large Language Model technology. The spotlight often falls on centralized services like OpenAI's ChatGPT, Anthropic's Claude, or Google's Gemini, but these services conflict with Particl's privacy-focused ethos and scale poorly for our use case: more listings would mean higher total API costs.

Indeed, the real innovation for our purposes has been in local and open models. These models can now run effectively on personal devices while achieving accuracy rates comparable to their cloud-based counterparts, all without ever connecting to third-party service providers.

This advance presented an opportunity to add context-based analysis to Particl Marketplace's moderation system without compromising on core principles. Because local models avoid both the privacy and the cost problems of centralized APIs, they are a natural fit for our needs.

The New Automated Moderation Script

Today, we're proud to introduce a new script that automates the moderation of Particl Marketplace content with minimal user input.

Operating Modes

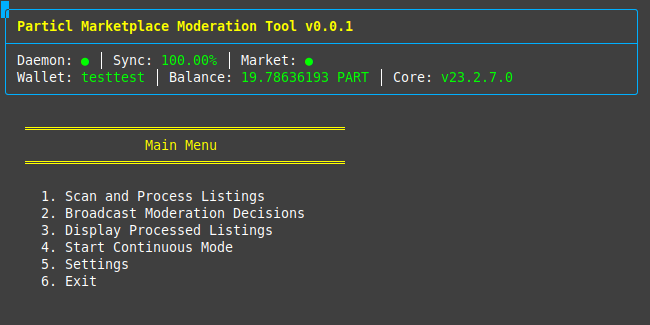

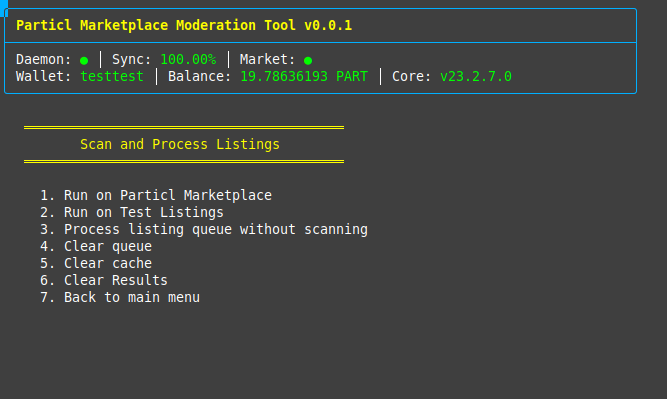

The script offers two distinct approaches to marketplace moderation: manual mode and continuous mode.

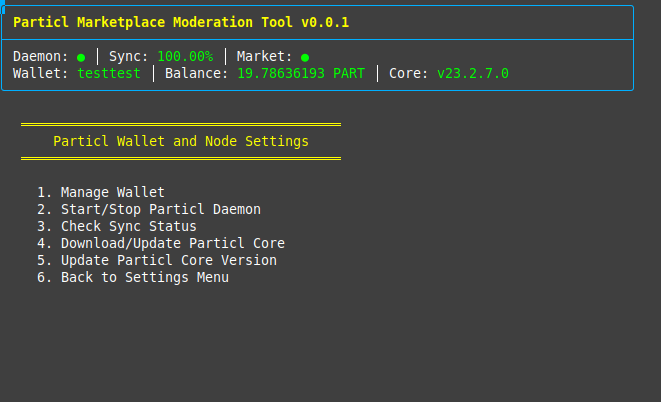

In manual mode, you maintain complete control through a CLI menu interface that lets you oversee each step of the process. This gives you the ability to review and adjust moderation decisions before they reach the network, making it especially valuable during your initial setup phase or when testing new moderation rules.

Continuous mode transforms this process into an autonomous operation. The script independently cycles through scanning the marketplace for new listings, analyzing each one, applying moderation decisions, and broadcasting votes to the network. After completing this sequence, it begins anew with another marketplace scan. While this mode significantly improves moderation efficiency and reduces user exposure to undesirable content, we strongly recommend implementing it only after thoroughly testing your rules in manual mode.
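The cycle described above (scan, analyze, apply, broadcast, repeat) can be sketched as a simple loop. This is an illustration only: the four stage callables are hypothetical placeholders rather than the script's real functions, and the pause between cycles is an assumption.

```python
import time
from typing import Callable, Optional


def run_cycle(scan, analyze, apply_decisions, broadcast) -> int:
    """Run one scan -> analyze -> apply -> broadcast pass; return the vote count."""
    listings = list(scan())
    verdicts = [analyze(listing) for listing in listings]
    votes = apply_decisions(listings, verdicts)
    broadcast(votes)
    return len(votes)


def run_continuous(
    cycle: Callable[[], int],
    pause_seconds: float,
    max_cycles: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Repeat `cycle` until `max_cycles` is reached (forever if None)."""
    completed = 0
    while max_cycles is None or completed < max_cycles:
        cycle()
        completed += 1
        sleep(pause_seconds)  # assumed pause before the next marketplace scan
    return completed
```

Passing `max_cycles` and `sleep` as parameters keeps the loop easy to test without waiting or running forever.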

Rule System and Analysis

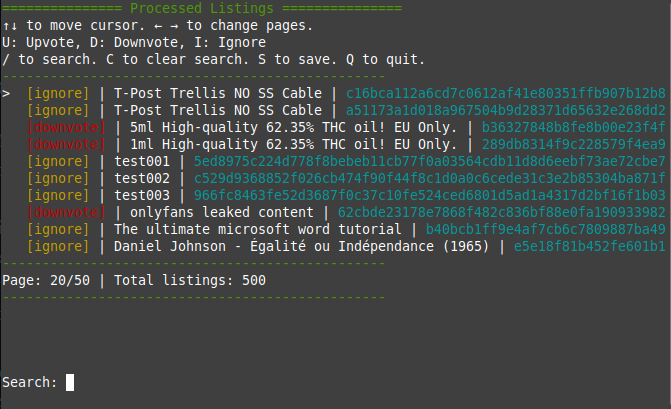

At the heart of this script's moderation process lies a sophisticated rule system powered by local LLM models. These models continuously monitor marketplace activity, evaluating new listings against your predefined criteria. Each rule can trigger one of three actions: downvote, upvote, or ignore, giving you precise control over the moderation process.

The system accepts rules in both keyword format and natural language descriptions, offering flexibility in how you define your moderation parameters. To help you get started, we've included a comprehensive set of template rules accessible through the CLI menu. These templates serve dual purposes — providing immediate functionality and demonstrating effective rule construction. You can use them as-is, modify them to better align with your specific needs, or entirely replace them with custom rules of your own.
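As a rough mental model, a rule pairs some text (keywords or a natural language description) with one of the three actions. The sketch below is hypothetical: the class names, the `make_rule` helper, and the example rules are illustrative and do not reflect the script's actual rule format.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    DOWNVOTE = "downvote"
    UPVOTE = "upvote"
    IGNORE = "ignore"


@dataclass(frozen=True)
class Rule:
    text: str       # keywords or a natural language description
    action: Action  # what to do when a listing matches


def make_rule(text: str, action_name: str) -> Rule:
    """Build a rule, rejecting empty text and unknown action names."""
    if not text.strip():
        raise ValueError("rule text must not be empty")
    # Action(...) raises ValueError for anything other than the three actions.
    return Rule(text.strip(), Action(action_name.strip().lower()))


# Illustrative rules only: one keyword style, one natural language style.
rules = [
    make_rule("counterfeit, forged documents", "downvote"),
    make_rule("The listing sells handmade goods and describes them clearly", "upvote"),
]
```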

For those interested in creating custom rules, we've included a diverse set of test listings spanning various categories — from standard marketplace items to prohibited products and contentious cases designed to put custom rules to the test. This test dataset proves invaluable in evaluating and refining your rules before deploying them in the live marketplace.

The Judgement Engine

The judgement system takes a deliberate approach to content analysis. The local LLM model examines both listing titles and descriptions, with one important distinction in its methodology. Rather than classifying listings with potentially loaded terms like 'illegal', 'remove', or 'unethical', it uses neutral terms such as 'true', 'false', or 'ignore'. This neutral framework helps the model remain flexible and unbiased, so it can handle highly specific custom rules without predetermined assumptions.
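One way to picture the neutral-label approach is a prompt that asks only whether a listing matches a rule, plus a parser that accepts nothing but the three neutral words. This is a sketch under assumptions: the prompt wording, the meaning of 'true' as "the listing matches the rule", and the fallback to 'ignore' for unrecognized replies are all illustrative, not the script's actual behavior.

```python
NEUTRAL_LABELS = ("true", "false", "ignore")


def build_prompt(rule_text: str, title: str, description: str) -> str:
    """Ask a neutral matching question instead of a moral or legal one."""
    options = ", ".join(NEUTRAL_LABELS)
    return (
        f"Rule: {rule_text}\n"
        f"Listing title: {title}\n"
        f"Listing description: {description}\n"
        f"Does the listing match the rule? Answer with exactly one word: {options}."
    )


def parse_verdict(reply: str) -> str:
    """Normalize a model reply to a neutral label.

    Assumption: anything that is not exactly one of the labels
    is treated as 'ignore' so that no vote is cast on unclear output.
    """
    word = reply.strip().lower().strip("'\".!")
    return word if word in NEUTRAL_LABELS else "ignore"
```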

Model Selection and Performance

After extensive evaluation of numerous local models, Gemma2 (2B) emerged as the optimal choice. Despite its compact size, it was consistently accurate in our evaluation, frequently outperforming models 50 times larger, including several state-of-the-art options like ChatGPT (large models of this kind often carry strong biases from their training). Gemma's efficient architecture allows it to run effectively on modest hardware configurations, from Raspberry Pis to virtual private servers.

Our commitment to improvement continues as we evaluate new models, making effective alternatives available through the script’s CLI menu as they emerge. We're also exploring specialized fine-tuning opportunities for Gemma2:2b to further optimize its marketplace moderation capabilities, although it remains unclear exactly what sort of performance boost that will grant the model, if any.

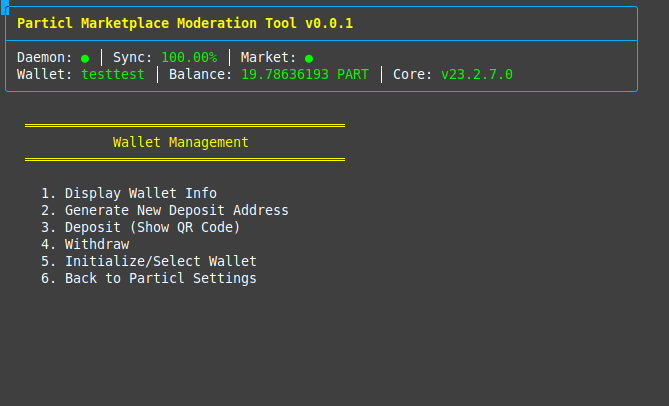

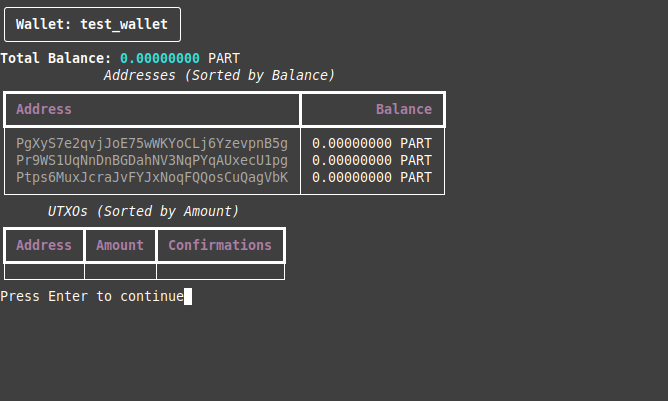

Wallet Configuration

To operate effectively, the moderation script requires two fundamental components: an active Particl node (particld) and a Particl wallet containing coins. Setting up your wallet environment can be accomplished in two ways.

The first approach utilizes the CLI menu's built-in wallet creation tool, which streamlines the process for new users. Alternatively, if you already maintain a Particl wallet, you can integrate it with the script. This process involves specifying your wallet's directory path in the config/config.yaml file and initializing it through the script's settings menu (Settings/Particl Wallet and Node Settings/Initialize Wallet).

The initialization process serves a crucial function beyond basic setup. It incorporates the marketplace's key and address into your SMSG database, enabling secure message encryption and decryption. This integration proves essential for both accessing marketplace content and participating in the voting system. Without proper initialization, you won't be able to decrypt marketplace listings or cast votes on content.

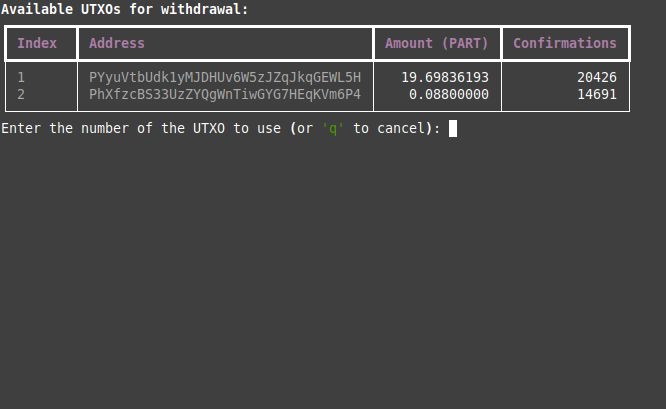

Vote Broadcasting System

The process of broadcasting votes to the network follows a structured approach designed to ensure accuracy and intentionality. When running the script for the first time with new moderation policies, we recommend a thorough review of the LLM's decisions. Access these through the "Display Processed Listings" menu, where you can examine each decision in detail.

During this review phase, you maintain full control to modify any automated decisions that don't align with your intentions. This verification step proves particularly valuable when working with new rule sets or in markets with unique content patterns.

Once satisfied with the moderation decisions, you can initiate the broadcasting process through the CLI menu. Remember that this operation requires coins in your wallet, as the weight of each vote is measured by the number of coins contained in that wallet. The script manages these transactions automatically, ensuring efficient use of your wallet's resources while maintaining the integrity of your voting decisions.

Frequently Asked Questions

Q: Can I use this application without running a local model?

A: Not currently. While we initially explored PetalsLLM, a decentralized GPU network running open models, we ultimately decided against including this option in the final release. Despite its promising concept, limitations in GPU provider availability made consistent uptime impossible. Additionally, the community-hosted models didn't meet the specific marketplace moderation performance requirements. While we continue monitoring developments in this space for future alternatives, we've found that gemma2:2b offers an excellent compromise — though it may process content more slowly on smaller devices, its minimal resource requirements make it viable even on modest hardware like Raspberry Pis, small VMs, and VPS servers.

Q: How is my data and privacy protected?

A: Privacy protection is fundamental to this script's core design. The script connects exclusively with the marketplace, eliminating third-party data collection risks. We've implemented vote anonymization through decision encoding, making it impossible for outside observers to determine which addresses cast specific votes. This approach aligns with Particl's core privacy and data security principles, which guided the script's development.

Q: What happens if the LLM makes a mistake on a listing?

A: Because LLMs are probabilistic by nature, occasional errors may occur. This is why we emphasize thorough testing using the provided test listings before deploying new moderation policies. We strongly recommend against using continuous mode until you're fully satisfied with your custom rules' performance. Manual mode provides an excellent environment for fine-tuning rules and understanding how the LLM interprets different content types.

Q: Is the script compatible with Windows, macOS, and Linux?

A: Yes. We've designed the app with cross-platform compatibility as a priority. Built using Python, it runs on any system supporting Python 3.8 or higher. The installation script creates a dedicated virtual environment and handles all dependency management, ensuring the app won't conflict with your system's existing Python packages or other software.

Q: What happens if my device loses power or the script crashes during continuous mode?

A: The script maintains queue files of processed listings and votes, allowing it to resume operations seamlessly after any interruption. When restarted, it will automatically scan for any listings it might have missed during the downtime, ensuring no content goes unprocessed.
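A minimal sketch of how a queue file can make processing resumable is shown below. The JSON layout, file handling, and function names are assumptions for illustration; the script's actual queue format may differ.

```python
import json
import os
from pathlib import Path
from typing import Iterable, List, Set


def load_processed(path: Path) -> Set[str]:
    """Load the IDs of listings already handled; empty if no queue file exists yet."""
    if not path.exists():
        return set()
    return set(json.loads(path.read_text(encoding="utf-8")))


def save_processed(path: Path, processed: Set[str]) -> None:
    """Write to a temporary file, then swap it in, so a crash cannot leave a half-written file."""
    tmp = path.with_suffix(path.suffix + ".tmp")
    tmp.write_text(json.dumps(sorted(processed)), encoding="utf-8")
    os.replace(tmp, path)


def pending(all_ids: Iterable[str], processed: Set[str]) -> List[str]:
    """Return the listings that still need processing after a restart."""
    return [listing_id for listing_id in all_ids if listing_id not in processed]
```

After a restart, calling `pending` with the current marketplace listing IDs and the reloaded set yields only the listings that were missed.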

Q: How much storage space does the local LLM model require?

A: The Gemma2 (2B) model requires approximately 1.6GB of storage space. The script's other components, including the Python environment and dependencies, need roughly 500MB. Particl-related files (e.g., blockchain) will require about 2.5GB. That adds up to roughly 4.6GB, so we recommend having at least 6GB of free storage space to accommodate the model, script, and temporary files generated during operation.
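The storage figures above can be checked with a short calculation, along with a test of the free space on your own disk. The component names and the use of decimal megabytes are assumptions; `shutil.disk_usage` is part of the Python standard library.

```python
import shutil

MB = 10**6  # decimal megabytes; an assumption about how the figures are measured

# Approximate figures quoted in the answer above, in MB.
COMPONENTS_MB = {
    "gemma2_2b_model": 1600,
    "python_env_and_dependencies": 500,
    "particl_files": 2500,
}
RECOMMENDED_FREE_MB = 6000


def required_mb() -> int:
    """Total of the listed components."""
    return sum(COMPONENTS_MB.values())


def headroom_mb() -> int:
    """Space left for temporary files if exactly the recommended amount is free."""
    return RECOMMENDED_FREE_MB - required_mb()


def has_recommended_space(path: str = ".") -> bool:
    """Check whether the volume holding `path` has the recommended free space."""
    return shutil.disk_usage(path).free >= RECOMMENDED_FREE_MB * MB
```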

Q: What are the minimum hardware requirements to run the script effectively?

A: For basic operation with reasonable performance, we recommend:

- RAM: 4GB minimum (8GB recommended for smoother operation)

- Storage: 6GB free space

- Internet connection: Standard broadband for marketplace connectivity

Note: The script might run on lower specifications, but processing speed may be significantly reduced.

Q: How does the script handle network connectivity issues?

A: The script is designed to handle network interruptions gracefully. If the connection to the marketplace is lost, it pauses operations and attempts to reconnect at regular intervals. Any votes queued during this period are safely stored locally. Once connectivity is restored, the script resumes operation and processes any listings it missed during the downtime.
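The pause-and-retry behavior described above can be sketched as follows. The function name, the `is_connected` callable, and the optional attempt limit are hypothetical; the script's actual reconnection logic is not shown here.

```python
import time
from typing import Callable, Optional


def wait_for_connection(
    is_connected: Callable[[], bool],
    retry_seconds: float,
    max_attempts: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll `is_connected` at a fixed interval until it succeeds.

    Returns True once connected, or False if `max_attempts` failed checks
    have been made (never gives up when `max_attempts` is None).
    """
    attempts = 0
    while not is_connected():
        attempts += 1
        if max_attempts is not None and attempts >= max_attempts:
            return False
        sleep(retry_seconds)  # wait before the next reconnection attempt
    return True
```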

Q: Can multiple instances of the script run simultaneously on different devices?

A: Yes, you can run multiple instances across different devices, each with their own wallet and rule set. This can be useful for providing redundancy. Each instance operates independently and contributes to the overall voting pool according to its wallet's stake.

Q: How can I back up my moderation rules and configuration?

A: The app stores all rules, configurations, and listing data in the config directory. We recommend regularly backing up both the config.yaml file and the rules directory. These can be restored on another installation of the script or used to recover your setup if needed.
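A backup along these lines can be automated with the Python standard library. This is a sketch: the function name and the timestamped folder naming are illustrative, and you need to pass in the actual locations of your config.yaml file and rules directory.

```python
import shutil
from datetime import datetime
from pathlib import Path


def back_up(config_file: Path, rules_dir: Path, backup_root: Path) -> Path:
    """Copy the config file and rules directory into a new timestamped folder."""
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = backup_root / f"moderation-backup-{stamp}"
    target.mkdir(parents=True, exist_ok=False)  # refuse to overwrite an existing backup
    shutil.copy2(config_file, target / config_file.name)
    shutil.copytree(rules_dir, target / rules_dir.name)
    return target
```

To restore, copy the files from the backup folder back into place on the new installation.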

Q: Is there a way to test my rules against historical marketplace data?

A: No, Particl Marketplace does not keep information about its listings beyond the expiration point of their associated SMSG messages. To test on more listings than what is currently available on the marketplace, please use the provided test listings (available from the CLI menu).

Q: How does the script handle updates to the LLM model?

A: LLM models are managed by Ollama, a local model runtime that is installed and started as part of the setup process. When new model versions become available, they can be downloaded through the script's menu system (provided that you've run `git pull` to update your moderation app in the first place).
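For reference, the same model download can be done directly with the Ollama command line tool, whose `ollama pull <model>` command fetches or updates a model. The Python wrapper below is a sketch of that manual route, not the script's own menu code, and the function names are hypothetical.

```python
import shutil
import subprocess
from typing import List


def pull_command(model: str) -> List[str]:
    """Build the Ollama CLI command that downloads or updates a model."""
    return ["ollama", "pull", model]


def pull_model(model: str = "gemma2:2b") -> None:
    """Run `ollama pull`, failing early if Ollama is not installed."""
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama executable not found on PATH")
    subprocess.run(pull_command(model), check=True)
```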

Looking Forward

The release of this automated moderation script marks a significant milestone in decentralized marketplace technology. It demonstrates that we can achieve effective content moderation while staying true to the principles of privacy, decentralization, and user autonomy.

This development opens up new possibilities for decentralized platforms. It shows that we can address complex challenges like content moderation while minimizing bias through technological innovation rather than centralized control. The script's ability to run locally, its customizable rule system, and its privacy-preserving design create a framework that could benefit other decentralized projects facing similar challenges.

Looking ahead, we're committed to continuing development based on community feedback and technological advances. We're particularly excited about the potential for improved local LLM models, community-contributed rule templates, and further optimizations such as fine-tuning that could make the script even more efficient and user-friendly.

Your feedback and contributions will be crucial in shaping the future of this tool. Whether you're a marketplace vendor, a buyer, or simply interested in decentralized technologies, we encourage you to try the script, experiment with different rules, and share your experiences with the community.
